At a virtual event yesterday kicked off by CEO Satya Nadella, Microsoft introduced Azure Quantum Elements (AQE), a new set of services and tools for quantum chemistry and materials science. It also added to the Microsoft AI Copilot family with “Copilot in Azure Quantum”, a GPT-4 based LLM tool to assist quantum researchers. Lastly, Microsoft broadly reviewed its quantum technology roadmap and introduced a new metric it calls reliable Quantum Operations Per Second (rQOPS in Microsoft parlance).

Nadella said, “Today, we are bringing together AI and quantum for the first time with the announcement of Azure Quantum Elements [and] are ushering in a new era of scientific discovery. Azure Quantum Elements is the first of its kind, comprehensive system for computational chemistry and material science. It applies our underlying breakthroughs in supercomputing, AI and quantum to open incredible new possibilities and take scientific discovery to a complete new level. We are also announcing Copilot in Azure Quantum so scientists can use natural language to reason through some of the most complex chemistry and material science challenges.

“Imagine using natural language to generate code to help model the electronic structure of a complex molecule and predict its exact properties. Or imagine simply describing the scientific problem you’re trying to solve and having the system configure the underlying software needed on the best hardware to run it. Just like GitHub Copilot is transforming software development, helping developers write better and faster code, our ambition is that Copilot in Azure Quantum will have [a] similar impact on the scientific process. Our goal is to compress the next 250 years of chemistry and material science progress into the next 25. And of course, Azure Quantum Elements is only the first step as you prepare for an even bigger transformation ahead of us [with] quantum supercomputing.”

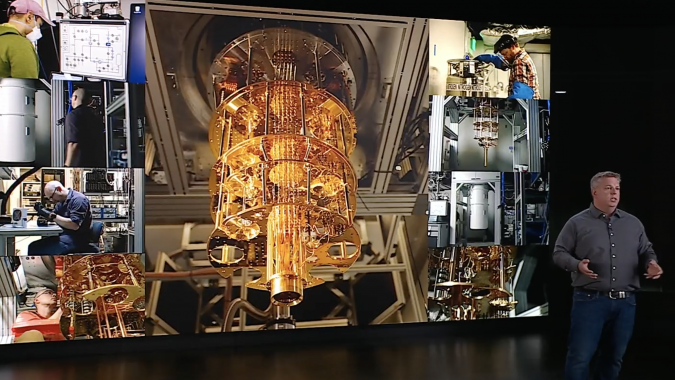

Microsoft’s big bets on AI and quantum computing were on full display. The entire event was a well-polished, tightly scripted video featuring a number of senior Microsoft/Azure execs, including Nadella; Jason Zander, EVP, strategic missions and technologies; Nihit Pokhrel, senior applied scientist; Krysta Svore, general manager, quantum; Brad Smith, vice chair and president; and Matthias Troyer, technical fellow and corporate vice president of quantum.

One quantum analyst welcomed Microsoft’s latest work but cautioned against expecting too much, too soon.

Heather West, research manager, quantum computing infrastructure systems, platforms, and technology, IDC, said, “With advancements in quantum hardware and software development, as well as new error mitigation and suppression techniques, the ability to gain a near-term advantage may be approaching faster than what many expected, including Microsoft. While Microsoft’s announcements will certainly contribute to this achievement, Microsoft’s roadmap to developing a quantum supercomputer and its rQOPS metric for measuring a quantum supercomputer’s performance seem more aspirational than achievable given how they are still in the very early stages of their qubit development.”

The main new offering, Azure Quantum Elements (AQE), is available now in private preview and presumably will become more broadly available. Microsoft says there has been a handful of early users, among them BASF, Johnson Matthey, and SCGC. As a big investor in OpenAI, Microsoft has ready access to the underlying GPT technology and will likely create many domain-specific versions. GitHub Copilot was first released in 2021. Just this March, the company released a business productivity tool, Microsoft 365 Copilot.

Zander has written a blog summarizing yesterday’s announcements. Near-term, AQE and the related Copilot tool are perhaps more likely to drive use of Azure HPC resources than quantum exploration, although Azure provides ready access to a variety of tools and NISQ (noisy intermediate-scale quantum) systems, including, for example, those from Rigetti, IonQ, Quantinuum, QCI, Pasqal, and Toshiba.

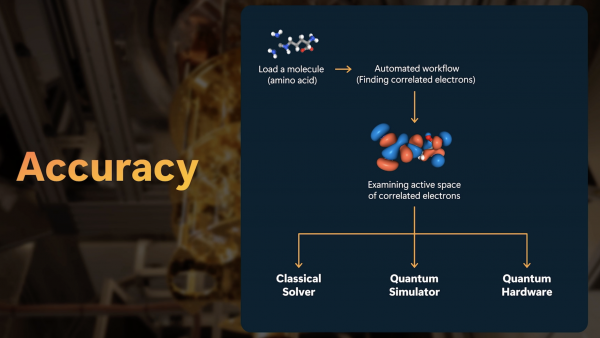

“[AQE] includes many popular open source and third party tools. In addition, scientists can use Microsoft specialized tools for automated reaction exploration to perform chemistry simulations with greater scale,” said Zander yesterday. “[AQE] also incorporates Microsoft’s specialized AI models for chemistry, which we train on millions of chemistry and material data points. We base these models on the same breakthrough technologies that you see in generative AI. However, instead of reasoning on top of human language, they reason on top of the language of nature: chemistry. We are using those models to speed up certain chemistry simulations by half a million times.”

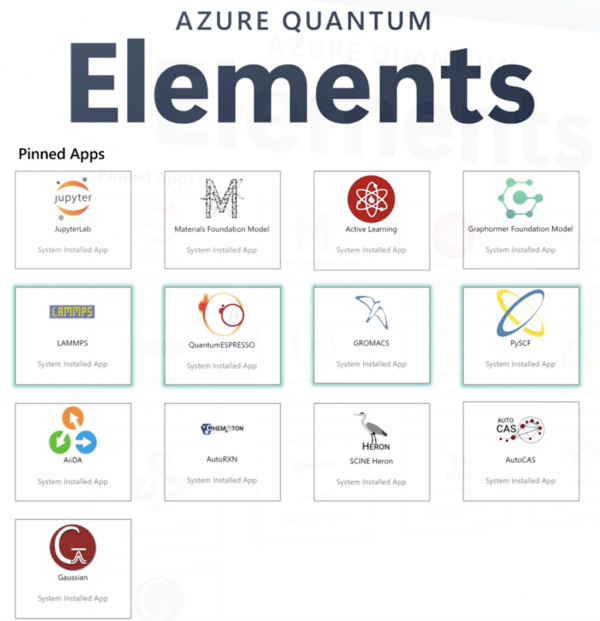

There’s been an emphasis on retaining relative ease of use and familiar interfaces such as Jupyter notebooks. Pokhrel gave a brief demonstration and said, “Most customers will start at our [AQE] custom user portal. Here, they can quickly get access to several widely used computational packages optimized for Azure hardware. Customers also have access to new custom AI-powered tools developed by Microsoft. Tying it all together are the workflow tools that enable scientists to automate and scale their discovery pipelines.”

Microsoft says AQE will enable users to:

- “Accelerate time to impact, with some customers seeing a six-month to one-week speedup from project kick-off to solution.

- “Explore more materials, with the potential to scale from thousands of candidates to tens of millions.

- “Speed up certain chemistry simulations by 500,000 times, effectively compressing nearly one year of research into one minute.

- “Improve productivity with Copilot in Azure Quantum Elements to query and visualize data, write code, and initiate simulations.

- “Get ready for quantum computing by addressing quantum chemistry problems today with AI & HPC, while experimenting with existing quantum hardware and getting priority access to Microsoft’s quantum supercomputer in the future.

- “Save time and money by accelerating R&D pipeline and bringing innovative products to market more quickly.”
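The "one year into one minute" figure follows directly from the 500,000x speedup, as a quick sanity check shows. The sketch below is illustrative arithmetic only; the function name and the 365-day year are assumptions made here, not anything Microsoft published.

```python
# Illustrative check of the "one year into one minute" claim.
# Assumption: a 365-day year and a uniform 500,000x speedup.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def compressed_minutes(original_minutes: float, speedup: float) -> float:
    """Return the runtime in minutes after applying a constant speedup factor."""
    return original_minutes / speedup


one_year_compressed = compressed_minutes(MINUTES_PER_YEAR, 500_000)
print(f"{one_year_compressed:.2f} minutes")  # prints "1.05 minutes"
```

At that factor, a year of simulation time shrinks to just over a minute, which matches Microsoft's "nearly one year" phrasing.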

During the virtual roll-out, Helmut Winterling, BASF senior vice president, digitalization, automation, and innovation management, said, “Quantum chemistry offers invaluable insights to boost the related R&D work. However, this requires a huge amount of computing power. And even then, many problems cannot yet be solved even on the largest computers in the world. By integrating our existing computing infrastructure with Azure Quantum Elements in the cloud, our team will be able to further push the limits for in silico development. This includes, for instance, chemists and other inorganic materials.”

One area where Microsoft hopes Copilot in Azure Quantum will succeed is training.

Svore noted, “We need people who get chemistry and material science to learn quantum. We need people who get quantum to learn chemistry and material science. Moreover, we need to grow the community tenfold together. The idea of empowering [researchers] is the motivating force behind Azure Quantum, and in particular, the new Copilot in Azure Quantum. This GPT-4 based Copilot is augmented with additional data related to quantum computing, chemistry and material science. It helps people learn quantum and chemistry concepts, and write code for today’s quantum computers. All in a fully integrated browser experience for free. No Azure subscription needed, you can ask Copilot questions about quantum, you can ask it to develop and compile quantum code right in the browser.”

On the broader question of Microsoft’s quantum technology roadmap, there were few details presented. Svore gave the bulk of this presentation. There are many qubit types being developed – superconducting, trapped ion, diamond/nitrogen vacancy, photonic, etc. So far, they are all too prone to error. There seems to be consensus among most major players that roughly a million physical qubits, supporting error-corrected logical qubits, will be needed for quantum computers to fulfill expectations. Microsoft also believes this.

Microsoft and a few others are betting on something called topological qubits that are inherently resistant to error. Microsoft’s approach depends on a mysterious quasi-particle – the Majorana – that has been hard to pin down. Discussing its merits is a technical topic for another time. There’s also a fair amount of agreement in the quantum community that if one could, practically, create and harness topological qubits, it would solve many challenges facing today’s NISQ systems. The Quantum Science Center, one of the U.S. National Quantum Information Science Research Centers (based at Oak Ridge National Laboratory), for example, is actively pursuing topological qubit development.

Svore said, “To create this new qubit, we first needed a major physics breakthrough, and I’m proud to say we’ve achieved it, and the results were just published in the journal by the American Physical Society[i]. We can now create and control Majorana. It’s akin to inventing steel, leading to the launch of the Industrial Revolution. This achievement clears the path to the next milestone, a hardware protected qubit that can scale, which we’re engineering right now. The third milestone is to compute with the hardware protected qubits, through entanglement and braiding. The fourth milestone is to create a multi qubit system that can execute a variety of early quantum algorithms.

“At the fifth milestone (slide above), a fundamental step change occurs. As Jason shared, our industry needs to advance beyond NISQ. This is the moment that happens for Microsoft. We will have logical qubits for the first time and a resilient quantum system. Once we have these reliable logical qubits, we are able to engineer a quantum supercomputer, delivering on our sixth milestone – unlocked solutions that have never been accessible before, solutions that are intractable on classical computers. Executing on this path will be transformative, not just for our industry, but for humanity.”

It’s probably fair to call the roadmap directional. Microsoft has not said much about its hardware development efforts, and proposing a new metric – reliable Quantum Operations Per Second (rQOPS) – seems a bit early. As both Zander and Svore noted in their comments, there are many basic science and engineering challenges ahead.

Svore said, “Today, NISQ machines are measured by counting their physical qubits, or quantum volume (QV, an IBM-developed quality metric). But for a quantum supercomputer, measuring performance will be all about understanding how reliable the system will be for solving real problems. To solve valuable scientific problems, the first quantum supercomputer will need to deliver at least 1 million reliable quantum operations per second, with an error rate of at most one in every trillion operations. Our industry as a whole is yet to achieve this goal as we advance towards a quantum supercomputer.”

About rQOPS, Zander said, “We’re offering a new performance measurement called reliable quantum operations per second or rQOPS. Since today’s quantum computers are all in level one (see slide), they all have an rQOPS of zero. Once we can produce logical qubits, we need to create machines that can solve problems that are unsolvable with classical computers. This will happen at 1 million rQOPS. The crowning achievement will be a general purpose programmable quantum supercomputer. This is the computer that scientists will use to solve many of the most complex problems facing our society.”
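Taken together, the two targets Svore cites (1 million reliable operations per second and at most one error per trillion operations) imply how long such a machine could run, on average, between errors. The back-of-the-envelope sketch below assumes errors arrive at a constant average rate; that assumption and the function name are introduced here for illustration and are not part of Microsoft's definition of rQOPS.

```python
# Back-of-the-envelope: average time between errors at the stated targets.
# Assumption (not from Microsoft): errors occur at a constant average rate.
SECONDS_PER_DAY = 24 * 60 * 60


def mean_seconds_between_errors(ops_per_second: int, ops_per_error: int) -> float:
    """Average seconds between errors, given one error per `ops_per_error` operations."""
    return ops_per_error / ops_per_second


seconds = mean_seconds_between_errors(ops_per_second=10**6, ops_per_error=10**12)
days = seconds / SECONDS_PER_DAY
print(f"{seconds:,.0f} seconds, about {days:.1f} days")
# prints "1,000,000 seconds, about 11.6 days"
```

Under those assumptions, a machine that just meets both targets would average about one error for every 11 to 12 days of continuous operation.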

It’s a grand vision. There are challenges ahead. It’s probably worth noting that Microsoft’s Troyer and two ETH colleagues published a cautionary paper – Disentangling Hype from Practicality: On Realistically Achieving Quantum Advantage. Nevertheless, Microsoft seems to be ramping up its quantum push.

Stay tuned.

Link to Zander blog, https://blogs.microsoft.com/blog/2023/06/21/accelerating-scientific-discovery-with-azure-quantum/

Link to Microsoft paper (InAs-Al hybrid devices passing the topological gap protocol), https://journals.aps.org/prb/abstract/10.1103/PhysRevB.107.245423

[i] Abstract from paper, InAs-Al hybrid devices passing the topological gap protocol, Physical Review B, published 21 June 2023

“We present measurements and simulations of semiconductor-superconductor heterostructure devices that are consistent with the observation of topological superconductivity and Majorana zero modes. The devices are fabricated from high-mobility two-dimensional electron gases in which quasi-one-dimensional wires are defined by electrostatic gates. These devices enable measurements of local and nonlocal transport properties and have been optimized via extensive simulations to ensure robustness against nonuniformity and disorder. Our main result is that several devices, fabricated according to the design’s engineering specifications, have passed the topological gap protocol defined in Pikulin et al. (arXiv:2103.12217). This protocol is a stringent test composed of a sequence of three-terminal local and nonlocal transport measurements performed while varying the magnetic field, semiconductor electron density, and junction transparencies.

“Passing the protocol indicates a high probability of detection of a topological phase hosting Majorana zero modes as determined by large-scale disorder simulations. Our experimental results are consistent with a quantum phase transition into a topological superconducting phase that extends over several hundred millitesla in magnetic field and several millivolts in gate voltage, corresponding to approximately one hundred microelectronvolts in Zeeman energy and chemical potential in the semiconducting wire. These regions feature a closing and reopening of the bulk gap, with simultaneous zero-bias conductance peaks at both ends of the devices that withstand changes in the junction transparencies. The extracted maximum topological gaps in our devices are 20–60µeV. This demonstration is a prerequisite for experiments involving fusion and braiding of Majorana zero modes.”