Enable GPT failover with Azure OpenAI and Azure API Management

The year is 2024. It is 2 p.m. in New York City on a cold and gloomy Monday. The CTO of a Wall Street hedge fund is reviewing the go-live plan for her new GenAI application, which will allow the firm’s analysts to produce reports in a fraction of the time. At the same time in Palo Alto, a Series A-funded startup has just made a breakthrough, leveraging GenAI to improve the accuracy of medical diagnoses. Three hours from now, a computer science student in London will launch a chatbot to help his classmates optimize their schedules.

Although they are on different paths, these three individuals have one thing in common: they will soon have to operate a GenAI application in production, with demanding users who expect flawless uptime and a high quality of service.

Things fail all the time: marriages, hard drives, power supplies, entire data centers... But failure is not the only thing to keep in mind when designing an application for high availability. Success is another. What if your application becomes so popular that it ends up being the victim of its own success? What if your users put so much stress on the Large Language Model (LLM) deployment that your application gets throttled? Wouldn’t it be nice to be able to catch these events and reroute requests to another deployment of the LLM seamlessly?

<ominous music> Enter Azure OpenAI and Azure API Management. </ominous music>

Azure OpenAI Service provides REST API access to OpenAI's powerful language models, including the GPT-4, GPT-4 Turbo with Vision, GPT-3.5-Turbo, and Embeddings model series. Azure API Management is a hybrid, multicloud management platform for APIs across all environments. Combined, Azure OpenAI and Azure API Management allow builders to add routing and rerouting capabilities to their GPT-backed applications. The use cases are numerous:

- Quota increases above and beyond a single deployment

- Provisioned Throughput Units (PTU) to Pay-as-you-go failover/spillover

- Geo-redundancy

- Intelligent routing of requests to the most relevant model

To illustrate the concept, this blog post focuses on handling a throttling event returned by the Azure OpenAI backend and seamlessly rerouting the request to another Azure OpenAI deployment using Azure API Management.

Initial setup

The starting point for this exercise is an Azure OpenAI deployment. In the console, I created a deployment of gpt-35-turbo called “micro”, which can initially handle 40 thousand tokens per minute (TPM). I use Postman to send API calls to my deployment, but cURL or the SDK of your choice would work equally well. To prove that the initial setup works, I sent a POST request to my Azure OpenAI deployment and, as expected, received a 200 OK response with the content corresponding to my request. Everything is working.

I can also see that I consumed a little over 300 tokens with that call.

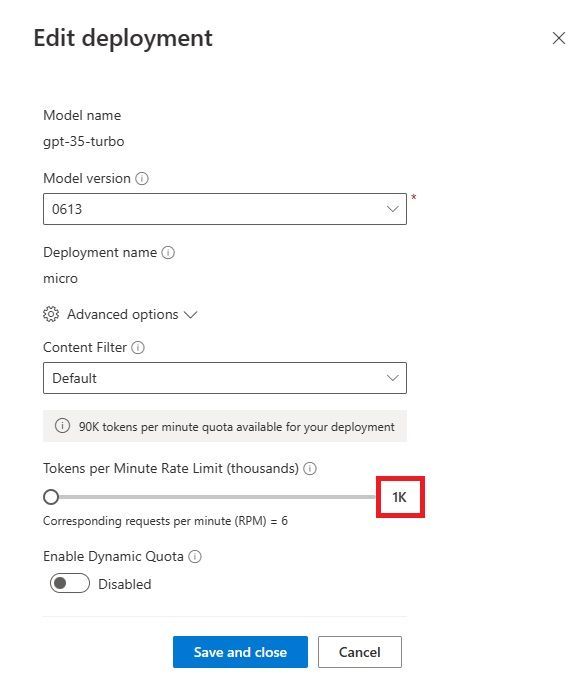
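For readers who prefer code to Postman, the direct call can be sketched with Python's standard library. This is a minimal sketch, not the author's setup: the resource name romull and the deployment micro come from the policy later in this post, while the key and prompt are placeholders. The request is built but not sent, and total_tokens reads the usage object of a chat completion response, one place where a token count such as the one above can be read.

```python
import json
import urllib.request

# Placeholders for illustration: "romull" and "micro" appear in this post's
# policy; the key and prompt are hypothetical.
RESOURCE = "romull"
DEPLOYMENT = "micro"
API_VERSION = "2023-07-01-preview"
API_KEY = "<your-api-key>"


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build, without sending, a chat completions POST to a single deployment."""
    url = (
        f"https://{RESOURCE}.openai.azure.com/openai/deployments/"
        f"{DEPLOYMENT}/chat/completions?api-version={API_VERSION}"
    )
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"api-key": API_KEY, "Content-Type": "application/json"},
        method="POST",
    )


def total_tokens(response_body: str) -> int:
    """Read the token count from the 'usage' object of a chat completion response."""
    return json.loads(response_body)["usage"]["total_tokens"]


# To actually send it: urllib.request.urlopen(build_chat_request("Hello"))
```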

Now let’s go to our Azure OpenAI deployment and lower its Tokens per Minute Rate Limit from 40k to 1k.

We now have an API call that we know consumes about 300 tokens and a deployment that can process 1,000 tokens per minute.

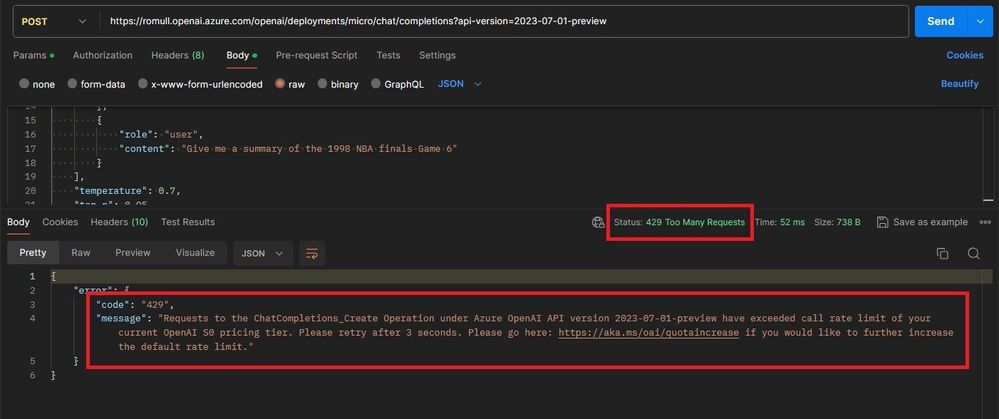

I am no rocket scientist, but I will wager that if we make that same API call a couple of times in a short period, we will likely get throttled. And indeed, instead of a 200 OK, we receive a 429 Too Many Requests response whose body explicitly states:

“Requests to the ChatCompletions_Create Operation under Azure OpenAI API version 2023-07-01-preview have exceeded token rate limit […]”.
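The arithmetic behind that wager can be made concrete with a deliberately simplified model: a limiter that grants a fixed budget of tokens per one-minute window. This is an assumption for illustration, not a description of how Azure OpenAI actually enforces its limits; it only shows why a fourth ~300-token call against a 1k TPM budget gets refused.

```python
from dataclasses import dataclass


@dataclass
class FixedWindowLimiter:
    """Toy tokens-per-minute limiter using a single fixed 60-second window.

    An illustrative assumption only: it is not how Azure OpenAI enforces limits.
    """
    tokens_per_minute: int
    window_start: float = 0.0
    used: int = 0

    def try_consume(self, tokens: int, now: float) -> int:
        """Return 200 if the call fits in the current window's budget, else 429."""
        if now - self.window_start >= 60:
            # A new minute has begun: reset the budget.
            self.window_start = now
            self.used = 0
        if self.used + tokens > self.tokens_per_minute:
            return 429  # over budget: throttled, nothing consumed
        self.used += tokens
        return 200


# The "micro" deployment at 1k TPM, hit by ~300-token calls one second apart.
micro = FixedWindowLimiter(tokens_per_minute=1_000)
statuses = [micro.try_consume(300, now=float(t)) for t in range(5)]
print(statuses)  # [200, 200, 200, 429, 429]
```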

And with that, we have a great starting point. Let’s see how we can intercept that 429 error and reroute our requests to a failover Azure OpenAI deployment instead.

The failover Azure OpenAI deployment

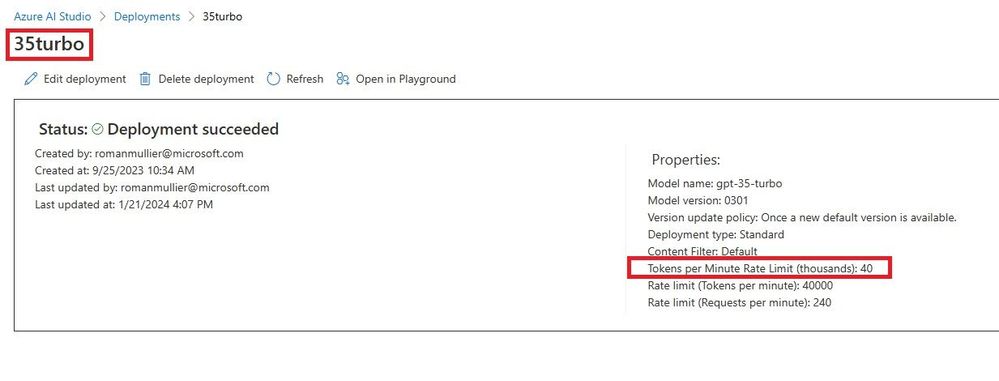

Keeping in mind that we called our primary deployment “micro”, let’s create another deployment under the same Azure OpenAI resource and call this one “35turbo”. Let’s set its Tokens per Minute Rate Limit to 40 thousand, which should be more than enough to absorb the overflow should our 1k TPM primary deployment get overwhelmed. Because both deployments live in the same resource, they can also conveniently share the same API key.
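A quick sanity check on “more than enough”: assuming every call costs about 300 tokens, as observed earlier, the integer division below estimates how many calls each deployment can absorb per minute. It ignores the service's real enforcement details and is only a rough estimate.

```python
def calls_per_minute(tpm: int, tokens_per_call: int = 300) -> int:
    """Whole calls of a given size that fit in one minute's token budget."""
    return tpm // tokens_per_call


# Rate limits from this post, in tokens per minute.
deployments = {"micro": 1_000, "35turbo": 40_000}
capacity = {name: calls_per_minute(tpm) for name, tpm in deployments.items()}
print(capacity)                # {'micro': 3, '35turbo': 133}
print(sum(capacity.values()))  # 136
```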

The API Management layer

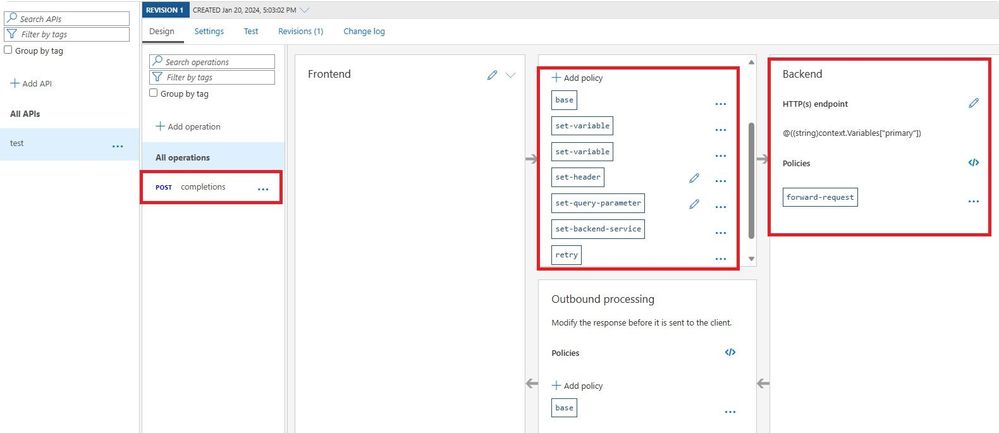

Instead of calling our Azure OpenAI endpoint directly, we will now call Azure API Management and configure that layer to monitor the response coming back from the “micro” backend. Should this response be a 429 error, our configuration will send a retry to our “35turbo” backend.

We create a “test” API and, within it, an operation called “completions” that accepts the POST verb. This allows us to send our POST requests from Postman to https://<api-url>/completions.

We can now create a policy that takes the user’s incoming request and transforms it before sending it to the backend. The URL is remapped to that of our Azure OpenAI “micro” deployment. At the very least, we also need to add the API version as a query parameter and the API key as a header.
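To make the transformation concrete, here is a hedged Python analogue of what the inbound policy does to a request. It assumes API Management appends the operation path (/completions) to the backend base URL, which is how a base URL ending in /chat/ turns into the chat completions endpoint; rewrite_inbound is a hypothetical helper, not part of any API Management SDK.

```python
from typing import Dict, Tuple
from urllib.parse import urlencode

# Values copied from the policy in this post.
PRIMARY = "https://romull.openai.azure.com/openai/deployments/micro/chat/"
API_VERSION = "2023-07-01-preview"


def rewrite_inbound(operation_path: str, headers: Dict[str, str], api_key: str,
                    base_url: str = PRIMARY) -> Tuple[str, Dict[str, str]]:
    """Hypothetical analogue of the inbound policy (operation_path has no query string).

    Assumes the gateway appends the operation path to the backend base URL.
    """
    url = base_url.rstrip("/") + "/" + operation_path.lstrip("/")
    # set-query-parameter: add api-version.
    url += "?" + urlencode({"api-version": API_VERSION})
    # set-header exists-action="override": drop any caller-supplied key first.
    new_headers = {k: v for k, v in headers.items() if k.lower() != "api-key"}
    new_headers["Api-Key"] = api_key
    return url, new_headers


url, headers = rewrite_inbound("/completions", {"Content-Type": "application/json"}, "<key>")
print(url)
# https://romull.openai.azure.com/openai/deployments/micro/chat/completions?api-version=2023-07-01-preview
```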

The policies can be expressed as the single XML document below:

```xml
<policies>
    <inbound>
        <base />
        <!-- Backend base URLs: "micro" is tried first, "35turbo" is the failover. -->
        <set-variable name="primary" value="https://romull.openai.azure.com/openai/deployments/micro/chat/" />
        <set-variable name="secondary" value="https://romull.openai.azure.com/openai/deployments/35turbo/chat/" />
        <!-- Keep a copy of the request body so it can be replayed on the retry. -->
        <set-variable name="body" value="@(context.Request.Body.As<string>(preserveContent: true))" />
        <!-- Authenticate to Azure OpenAI on the caller's behalf (key redacted). -->
        <set-header name="Api-Key" exists-action="override">
            <value>API KEY REDACTED</value>
        </set-header>
        <set-query-parameter name="api-version" exists-action="override">
            <value>2023-07-01-preview</value>
        </set-query-parameter>
        <!-- Route to the primary deployment by default. -->
        <set-backend-service base-url="@((string)context.Variables["primary"])" />
    </inbound>
    <backend>
        <!-- Retry once, immediately, if the backend answers 429 Too Many Requests. -->
        <retry condition="@((int)context.Response.StatusCode == 429)" count="1" interval="1" first-fast-retry="true">
            <choose>
                <!-- Skipped on the first pass (no response yet); on the retry, switch to the secondary and restore the body. -->
                <when condition="@(context.Response != null && (context.Response.StatusCode == 429))">
                    <set-backend-service base-url="@((string)context.Variables["secondary"])" />
                    <set-body>@((string)context.Variables["body"])</set-body>
                </when>
            </choose>
            <forward-request />
        </retry>
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```

The policy above adds the api-version query parameter and the Api-Key header to every inbound request. It also declares three variables: primary and secondary, which hold our two backend URLs, and body, a copy of the request body read with preserveContent: true so that it can be replayed on a retry. The backend URL is set to the primary by default; in the backend section, should the primary return a 429 error, the retry policy sends the request once more, immediately, to the secondary.
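The retry logic can be easier to reason about as ordinary code. The sketch below is a client-side analogue of the backend section, not how API Management executes policies: forward_with_failover and the injected send callable are hypothetical names, and a bare status code stands in for the full response. It tries the primary and, only on a 429, retries once against the secondary with the preserved body.

```python
from typing import Callable, Tuple

# Backend base URLs, copied from the policy in this post.
PRIMARY = "https://romull.openai.azure.com/openai/deployments/micro/chat/"
SECONDARY = "https://romull.openai.azure.com/openai/deployments/35turbo/chat/"

# Hypothetical transport: send(base_url, body) performs one POST and returns
# the status code. Injecting it keeps the sketch runnable without a network.
Sender = Callable[[str, str], int]


def forward_with_failover(body: str, send: Sender) -> Tuple[str, int]:
    """Mirror the <retry count="1"> block: one attempt, plus one retry on 429."""
    backend = PRIMARY
    status = send(backend, body)
    if status == 429:
        # first-fast-retry="true": retry immediately, switching to the
        # secondary and replaying the body captured before the first attempt.
        backend = SECONDARY
        status = send(backend, body)
    # Whatever comes back now, even a second 429, goes to the caller.
    return backend, status
```

As with count="1" in the policy, a 429 from the secondary is returned to the caller rather than retried again.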

Testing

In Postman, I modify my request to call the Azure API Management layer instead of the Azure OpenAI backend directly. I can also remove my API version parameter and API key header, since Azure API Management acts as a proxy and adds them for me. Note that if Azure API Management is now in charge of authenticating calls to Azure OpenAI, you are in turn responsible for authenticating calls to Azure API Management (for example, by requiring a subscription key); otherwise, you have just opened your Azure OpenAI deployment to the world.
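Once the gateway requires a subscription, the client call changes accordingly. A minimal sketch, assuming a hypothetical gateway host example-apim.azure-api.net and an API URL suffix of test; Ocp-Apim-Subscription-Key is the header API Management reads subscription keys from by default. No api-version or api-key is sent, because the policy adds them.

```python
import json
import urllib.request

# Hypothetical gateway endpoint: "example-apim" and the "test" URL suffix are
# placeholders for your own https://<api-url>/completions.
APIM_URL = "https://example-apim.azure-api.net/test/completions"
SUBSCRIPTION_KEY = "<your-subscription-key>"


def build_gateway_request(prompt: str) -> urllib.request.Request:
    """POST to API Management; api-version and api-key are added by the policy."""
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]})
    return urllib.request.Request(
        APIM_URL,
        data=body.encode("utf-8"),
        headers={
            # Default header name API Management reads subscription keys from.
            "Ocp-Apim-Subscription-Key": SUBSCRIPTION_KEY,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```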

Let’s make a few back-to-back calls to our API in a short period of time.
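A short script can stand in for clicking Send repeatedly in Postman. This sketch assumes a callable that performs one POST and returns its status code; status_of shows one way to get that with urllib, which raises HTTPError for 4xx responses such as 429, so the code is read from the exception. burst simply tallies the results.

```python
import urllib.error
import urllib.request
from collections import Counter
from typing import Callable


def status_of(req: urllib.request.Request) -> int:
    """Send one request and return its HTTP status, including error statuses."""
    try:
        with urllib.request.urlopen(req) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises for 4xx/5xx; a throttled call surfaces here as 429.
        return err.code


def burst(send: Callable[[], int], n: int = 10) -> Counter:
    """Fire n calls back to back and count how often each status code appears."""
    return Counter(send() for _ in range(n))


# Usage against the gateway (build_request is a hypothetical request factory):
# results = burst(lambda: status_of(build_request()), n=10)
# print(results)  # with failover in place, ideally no 429 entries
```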

Unlike with our previous setup, I am now unable to trigger the 429 error. This leads me to think that we successfully implemented failover on throttling. Trust me. But verify. The tracing capabilities within Azure API Management allow us to follow the call flow step by step and, in our case, to see that the backend was successfully swapped from primary to secondary.

Conclusion

The Microsoft Azure platform provides powerful yet simple building blocks that can be assembled into fast and resilient applications. This is why builders love it. If Azure OpenAI is already the preferred cloud-based GenAI service for many reasons, I hope this post helps highlight how Azure API Management on top of Azure OpenAI can unlock even higher availability.

Much brotherly love to my colleague Brady Leavitt for his guidance and peer review.
